Full Visibility. Strong Protection. Real Control.

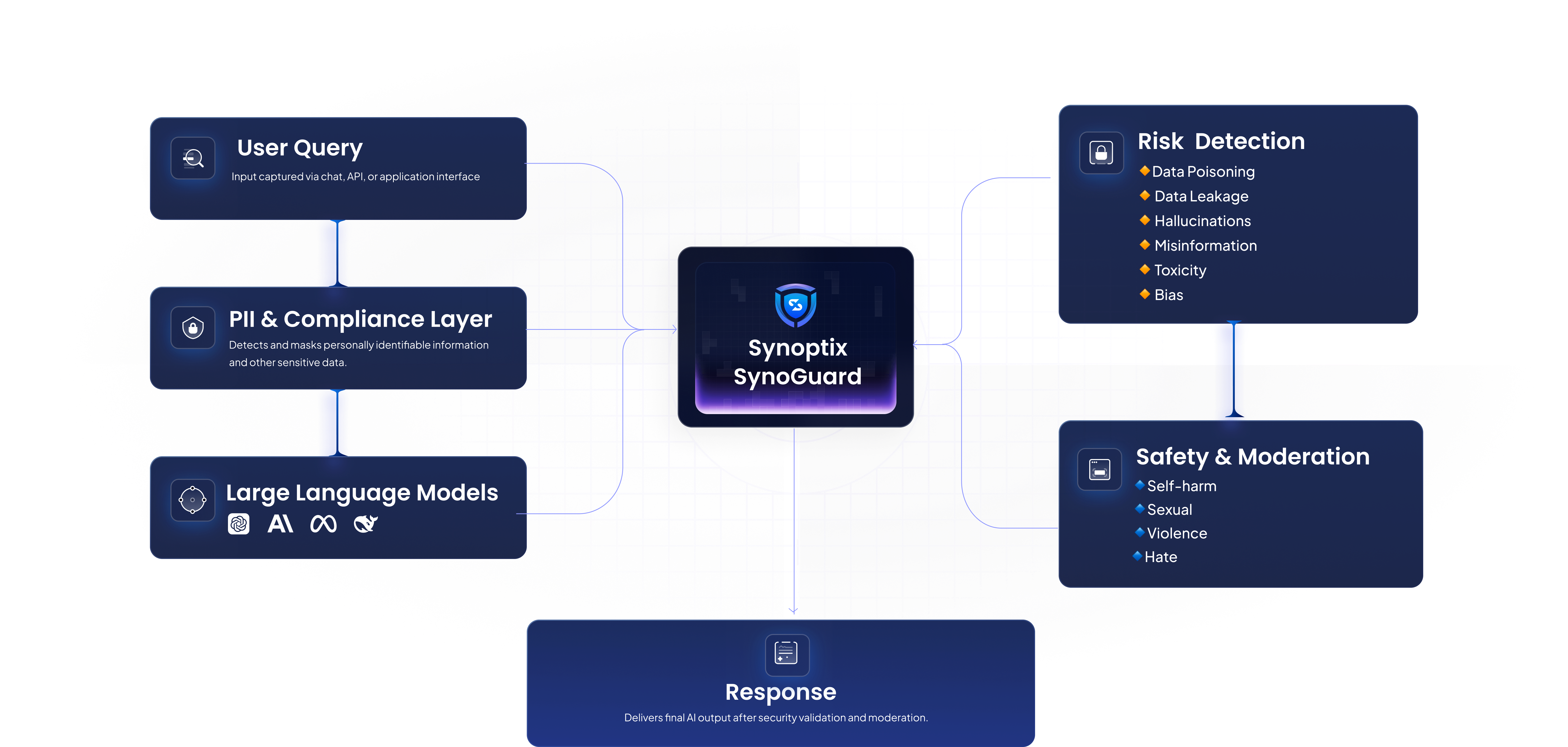

SynoGuard: Responsible AI Security Tool Built for Enterprise

Prompt Injection & Indirect Attack

SynoGuard detects and flags attempts to bypass safety filters or manipulate instructions in harmful ways, keeping your AI secure and aligned with approved behaviour.

PII Extraction & Detection

Detects and redacts personal or sensitive information in prompts and responses to protect your data. This includes emails, phone numbers, addresses, credit card numbers and passport details.
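To make the idea concrete, here is a minimal sketch of pattern-based redaction. It is not SynoGuard's implementation: the `PII_PATTERNS` table, the `redact` function, and the regular expressions are hypothetical and deliberately simple, and production detectors typically combine patterns with checksums and context-aware models.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Order matters: card numbers are checked before phone numbers so that a
# long digit run is labelled CARD rather than PHONE.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace each match with a [LABEL] placeholder and report what was found."""
    found: list[str] = []
    for label, pattern in PII_PATTERNS.items():
        def _substitute(match: re.Match, label: str = label) -> str:
            found.append(label)
            return f"[{label}]"
        text = pattern.sub(_substitute, text)
    return text, found


if __name__ == "__main__":
    print(redact("Mail jane.doe@example.com or call +44 20 7946 0958"))
```

The same function can be applied to both the prompt and the model's response, so that sensitive values are masked in each direction.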

Toxicity Detection

SynoGuard assesses the severity of harmful or inappropriate content in user queries, across categories such as self-harm, sexual content, violence, and hate speech.

OWASP Top 10 for LLMs

Ensure your AI stays secure and compliant with SynoGuard. We continuously update your guardrails to align with the latest security standards, including the OWASP Top 10 for LLMs, so you’re always protected against emerging threats.

Attackers can craft prompts that override system behaviour or extract sensitive data. SynoGuard inspects every input to detect and block manipulative prompts before they reach your AI, so the model always responds within safe, authorised boundaries.

AI systems can unintentionally leak private or restricted data. SynoGuard scans all inputs and outputs to prevent exposure of sensitive content, keeping your users' privacy and your business data fully protected.

Third-party components and models can introduce hidden risks. SynoGuard audits your AI supply chain, checking model sources, datasets, and integrations for weaknesses, bias, or unsafe dependencies before they impact performance or security.

Corrupted or biased training data can lead your AI to generate misleading or harmful responses. SynoGuard filters out low-quality or adversarial data sources during training and fine-tuning, keeping your model clean, accurate, and trustworthy.

Unchecked AI responses may be misused or cause harm if shared externally. SynoGuard validates every output in real time to ensure it meets your safety, compliance, and content standards before it ever leaves your system.

When an LLM can access tools, APIs, or plugins, it gains the power to act on its own. SynoGuard sets clear limits on what the model can do, monitors every action, and blocks any unauthorised or unsafe behaviour, keeping system control firmly in your hands.
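One common way to limit what a model can do is an allowlist checked before every tool call, with each decision logged. The sketch below illustrates that pattern only. `ToolPolicy`, `call_tool`, and the tool names are hypothetical and are not part of any SynoGuard API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolPolicy:
    """Hypothetical policy: a set of permitted tool names plus an audit trail."""
    allowed: set[str]
    audit_log: list[tuple[str, bool]] = field(default_factory=list)

    def authorize(self, tool_name: str) -> bool:
        permitted = tool_name in self.allowed
        # Record every decision, whether the call was allowed or blocked.
        self.audit_log.append((tool_name, permitted))
        return permitted


def call_tool(policy: ToolPolicy, tool_name: str,
              tools: dict[str, Callable[..., Any]], **kwargs: Any) -> Any:
    """Run a tool requested by the model only if the policy permits it."""
    if not policy.authorize(tool_name):
        raise PermissionError(f"tool '{tool_name}' is not permitted")
    return tools[tool_name](**kwargs)


if __name__ == "__main__":
    tools = {
        "search": lambda query: f"results for {query}",
        "delete_file": lambda path: f"deleted {path}",
    }
    policy = ToolPolicy(allowed={"search"})
    print(call_tool(policy, "search", tools, query="quarterly report"))
    try:
        call_tool(policy, "delete_file", tools, path="/tmp/data")
    except PermissionError as err:
        print("blocked:", err)
```

Denying by default means a newly added tool stays unavailable to the model until someone explicitly approves it.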

If attackers gain visibility into system prompts, they can manipulate model behaviour. SynoGuard keeps all system instructions secure and monitors for leakage risks, so the core of your AI logic stays protected and private.

Attackers may exploit embedding systems to inject harmful data or distort retrieval results. SynoGuard monitors your embeddings for anomalies, filters adversarial inputs, and ensures consistent, reliable semantic search performance.

AI-generated misinformation can harm brand credibility and decision-making. SynoGuard tracks response accuracy and context, alerting your team to misleading outputs and offering correction workflows to prevent reputational damage.

Some prompts or plugins can cause runaway compute usage or intentional slowdowns. SynoGuard watches for spikes in usage, throttles excessive activity, and helps you stay within performance and cost thresholds without compromising functionality.
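Throttling of this kind is often built on a token bucket: each request spends tokens, and tokens refill at a fixed rate up to a cap. The sketch below shows that general technique under assumed parameters. The `TokenBucket` class and its arguments are hypothetical and do not describe how SynoGuard itself throttles traffic.

```python
import time
from typing import Callable


class TokenBucket:
    """Hypothetical per-client limiter: spend tokens per request, refill over time."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock            # injectable so the limiter can be tested
        self.tokens = capacity        # start with a full bucket
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend `cost` tokens if the request fits the budget."""
        now = self.clock()
        elapsed = now - self.last
        # Refill for the time that has passed, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if cost <= self.tokens:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=5, refill_per_sec=1.0)
    decisions = [bucket.allow() for _ in range(7)]
    print(decisions)  # a burst larger than the capacity is partly rejected
```

Setting `cost` in proportion to the expected compute of a request (for example, its token count) lets expensive prompts drain the budget faster than cheap ones.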
